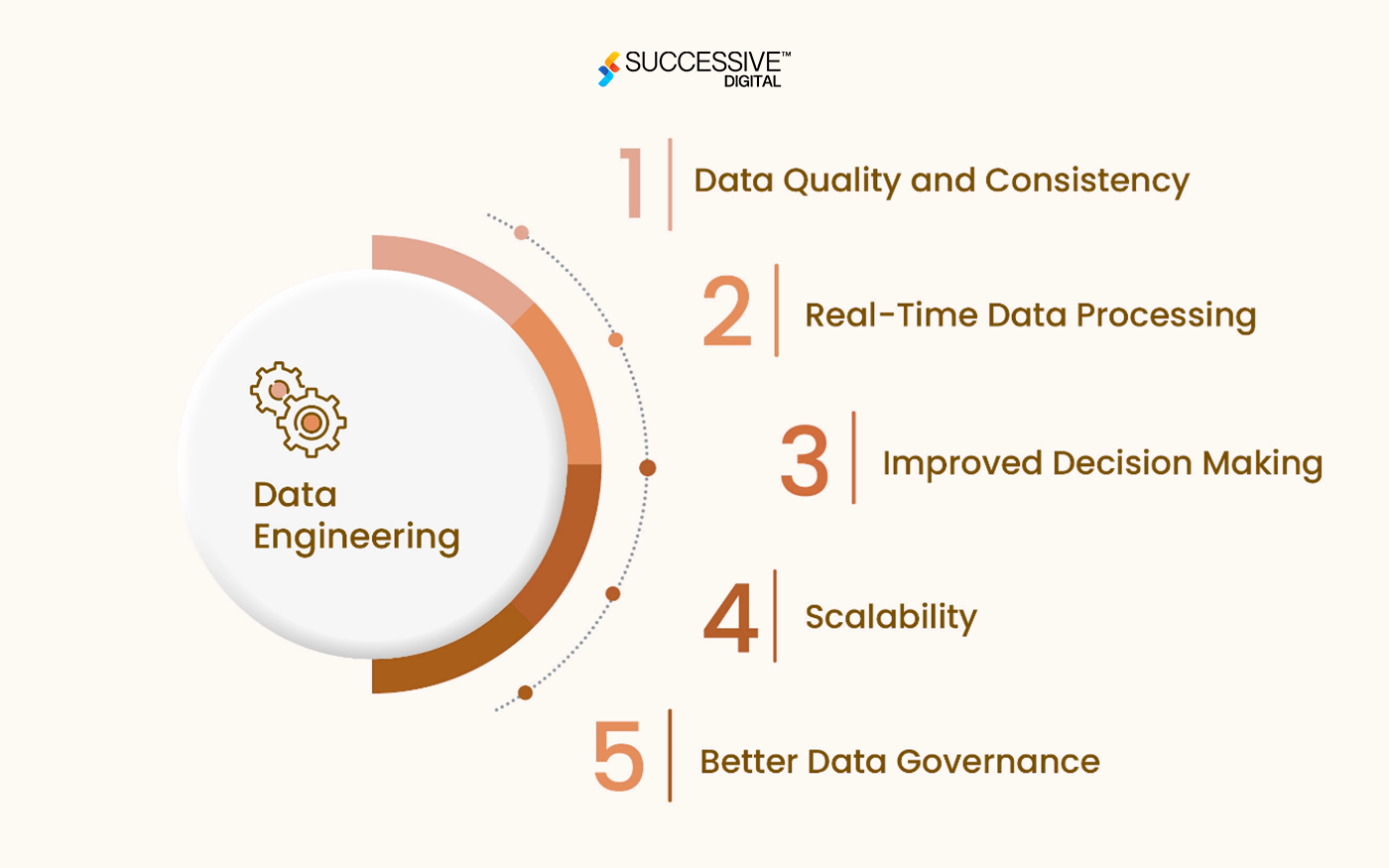

Data engineering has become the foundation on which companies build their data-driven strategies in today's digital transformation landscape. As data grows exponentially, from structured transaction records to unstructured data streams, the discipline of data engineering is essential for navigating the complexity of modern data ecosystems.

Data engineering spans the collection, storage, processing, and transformation of data into actionable insights. At its core, data engineering is the backstage orchestrator that ensures data flows reliably from different sources to analytics platforms, so that businesses can make informed decisions.

In this fast-moving field, data engineers build robust infrastructure and efficient data pipelines that can adapt as data needs evolve. Collaboration with researchers, stakeholders, and experienced vendors of data engineering technologies is essential for turning today's business needs into concrete solutions that drive competitive innovation and profit.

This blog explores the key concepts of data engineering: its core components, key technologies, best practices, real-world applications, and future trends.

Core Components of Data Engineering

Data Quality and Analysis:

Data quality and analysis are essential components of data engineering, ensuring that data stays accurate, reliable, and consistent across the entire data lifecycle. Data engineers validate records to identify and correct errors, discrepancies, and inconsistencies. Cleaning and enrichment techniques include data profiling, anomaly detection, and data lineage assessment, which help engineers test data thoroughly and identify areas for improvement.

Quality metrics: By monitoring data quality KPIs such as completeness and accuracy, data engineers can verify that the data meets the required criteria and is fit for its intended use in analysis and decision-making.
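To make profiling, anomaly detection, and quality metrics concrete, here is a minimal Python sketch that assumes records arrive as a list of dictionaries. The names `check_quality` and `QualityReport`, the completeness metric, and the z-score threshold are illustrative choices rather than a standard API, and a z-score test is just one simple anomaly detector among many.

```python
from dataclasses import dataclass, field
from statistics import mean, pstdev


@dataclass
class QualityReport:
    total_rows: int
    completeness: float  # share of rows whose required fields are all present
    incomplete_rows: list = field(default_factory=list)  # indexes of rows with missing fields
    anomalies: list = field(default_factory=list)        # indexes of rows flagged as outliers


def check_quality(rows, required_fields, numeric_field, z_threshold=3.0):
    """Profile a batch of dict records: a completeness metric plus z-score anomaly detection."""
    incomplete = {i for i, row in enumerate(rows)
                  if any(row.get(f) in (None, "") for f in required_fields)}
    # Only complete rows that carry a numeric value take part in the outlier check.
    values = [(i, float(row[numeric_field])) for i, row in enumerate(rows)
              if i not in incomplete and row.get(numeric_field) not in (None, "")]
    anomalies = []
    if len(values) >= 2:
        mu = mean(v for _, v in values)
        sigma = pstdev([v for _, v in values])
        if sigma > 0:  # a constant column has no outliers by this definition
            anomalies = [i for i, v in values if abs(v - mu) / sigma > z_threshold]
    completeness = 1 - len(incomplete) / len(rows) if rows else 1.0
    return QualityReport(len(rows), completeness, sorted(incomplete), anomalies)
```

For example, `check_quality(rows, ["id", "amount"], "amount", z_threshold=2.0)` reports the completeness ratio, the indexes of rows missing an `id` or `amount`, and any amount far from the batch mean. Incomplete rows are excluded from the outlier check so that a single defect is not reported twice.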

Metadata Management:

Metadata management involves capturing, storing, and managing metadata: data that describes data assets, such as their structure, size, lineage, and usage. Data engineers use metadata repositories and catalogs to record metadata across the organization's data channels. They capture technical metadata (e.g., schemas, data types) and business metadata (e.g., data lineage, data ownership) to support data discovery, governance, and collaboration from a centralized view. Tools such as Apache Atlas and Collibra index and search metadata, track data lineage, and help ensure compliance with data management policies.

By managing metadata effectively, data engineers enable data discovery, lineage tracking, and governance, allowing users to find data easily and make informed decisions based on trusted data.
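The ideas behind a metadata catalog (technical metadata, business metadata, search, and lineage) can be sketched with a small in-memory structure. The `MetadataCatalog` and `DatasetMetadata` classes below are hypothetical illustrations in Python, not the API of Apache Atlas or Collibra; those tools persist, index, and secure this information at far larger scale.

```python
from dataclasses import dataclass, field


@dataclass
class DatasetMetadata:
    name: str
    schema: dict                                  # technical metadata: column name -> type
    owner: str                                    # business metadata: accountable team
    upstream: list = field(default_factory=list)  # direct parent datasets, for lineage
    tags: set = field(default_factory=set)        # free-form labels for discovery


class MetadataCatalog:
    """Hypothetical in-memory catalog supporting search and transitive lineage."""

    def __init__(self):
        self._entries = {}

    def register(self, meta):
        self._entries[meta.name] = meta

    def search(self, term):
        """Return dataset names whose name, tags, or column names contain the term."""
        term = term.lower()
        return sorted(m.name for m in self._entries.values()
                      if term in m.name.lower()
                      or any(term in t.lower() for t in m.tags)
                      or any(term in c.lower() for c in m.schema))

    def lineage(self, name):
        """Return every upstream dataset that `name` depends on, directly or indirectly."""
        seen, stack = [], list(self._entries[name].upstream)
        while stack:
            parent = stack.pop()
            if parent not in seen:
                seen.append(parent)
                if parent in self._entries:
                    stack.extend(self._entries[parent].upstream)
        return sorted(seen)
```

Registering a derived table with `upstream=["raw_orders", "raw_customers"]` lets `lineage` answer "which sources feed this report?", the question auditors and data consumers most often ask of a catalog.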

Scalability and Efficiency:

Scalability and efficiency are essential considerations in data engineering, ensuring that data systems can cope with growing data volumes and demanding analytical workloads. To achieve this, data engineers design scalable architectures, using sharding and partitioning to distribute data processing and storage across clusters and nodes, and caching to avoid recomputing frequently requested results.

This allows organizations to scale their data infrastructure horizontally, adding more nodes as needed to handle evolving requirements. In addition, data engineers can improve query performance by optimizing database structures and indexing strategies and by implementing caching mechanisms to reduce latency and improve responsiveness.
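Here is a minimal Python sketch of two of these strategies, assuming records are dictionaries with a key field: hash partitioning (the basis of sharding) and caching of a repeated lookup. `shard_for`, `partition`, and `lookup_region` are hypothetical names; `lookup_region` stands in for an expensive database query.

```python
import hashlib
from functools import lru_cache


def shard_for(key: str, num_shards: int) -> int:
    """Map a key to a shard with a stable hash (built-in hash() of str is randomized per process)."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_shards


def partition(records, key_field, num_shards):
    """Group records by shard so each node stores and processes only its own slice."""
    shards = {i: [] for i in range(num_shards)}
    for record in records:
        shards[shard_for(str(record[key_field]), num_shards)].append(record)
    return shards


DB_CALLS = {"count": 0}  # instrumentation for the demo only


@lru_cache(maxsize=1024)
def lookup_region(customer_id: int) -> str:
    """Hypothetical slow lookup; repeated calls are served from an in-process LRU cache."""
    DB_CALLS["count"] += 1
    return "EU" if customer_id % 2 else "US"
```

Simple modulo hashing reassigns most keys whenever `num_shards` changes, which is why systems that rebalance often tend to use consistent hashing instead.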

Cost Efficiency and Resource Efficiency:

Cost efficiency and resource efficiency are critical concerns in data engineering, ensuring that enterprises get the most from their data investments while reducing operating costs. Data engineers can adopt efficient storage and processing solutions, using cloud-native technologies and resource management techniques to make better use of compute and storage, improve operational efficiency, and lower costs. This includes provisioning resources dynamically based on workload, right-sizing infrastructure to match business requirements, and reallocating resources as conditions change.

By monitoring resource utilization, estimating costs, and proactively analyzing overall spending, data engineers can identify opportunities for efficiency gains and savings, helping organizations achieve their data goals while staying within budget.
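Dynamic provisioning often reduces to a scaling rule. The Python sketch below sizes a worker pool from the current backlog, clamped between a minimum and a maximum, and estimates the hourly cost. Every number in the hypothetical `ScalingPolicy` (throughput, drain window, per-worker price) is a placeholder, not a real cloud rate; in practice you would feed it measured throughput and your provider's pricing.

```python
import math
from dataclasses import dataclass


@dataclass
class ScalingPolicy:
    min_workers: int = 1
    max_workers: int = 20
    records_per_worker_per_min: int = 10_000  # assumed measured throughput per worker
    target_drain_minutes: int = 15            # backlog should clear within this window
    hourly_cost_per_worker: float = 0.20      # hypothetical price, not a real cloud rate


def desired_workers(backlog: int, policy: ScalingPolicy) -> int:
    """Size the worker pool so the current backlog drains within the target window."""
    capacity_per_worker = policy.records_per_worker_per_min * policy.target_drain_minutes
    needed = math.ceil(backlog / capacity_per_worker) if backlog > 0 else 0
    # Clamp: keep a floor for availability and a ceiling for budget control.
    return max(policy.min_workers, min(policy.max_workers, needed))


def hourly_cost(workers: int, policy: ScalingPolicy) -> float:
    """Estimated spend per hour for a given pool size."""
    return round(workers * policy.hourly_cost_per_worker, 2)
```

With the defaults, one worker can drain 150,000 records within the 15-minute target, so a backlog of 150,001 records asks for two workers, while a backlog of ten million is capped at 20 to stay within budget.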

Data Governance and Security:

Data governance and security protect sensitive data and keep its handling compliant. This includes implementing encryption, auditing capabilities, and access controls to protect sensitive records and ensure data privacy and confidentiality. GDPR, HIPAA, CCPA, and similar regulations define requirements for how data is managed and secured, obliging data engineers to build robust security measures into their data engineering process.
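Access control and pseudonymization are two of these measures that fit in a short example. The Python sketch below assumes a hypothetical role-to-column policy (`POLICY`) and a set of PII columns; it returns only the columns a role may see and replaces other PII with a keyed HMAC-SHA256 digest, so values stay joinable without being exposed. Encryption at rest and in transit is normally handled by the storage layer, TLS, or a dedicated cryptography library, and is not shown here.

```python
import hashlib
import hmac

# Hypothetical policy: which columns each role may read in clear text.
POLICY = {
    "analyst": {"order_id", "amount", "country"},
    "support": {"order_id", "email", "country"},
}
PII_COLUMNS = {"email"}


def pseudonymize(value: str, key: bytes) -> str:
    """Keyed HMAC-SHA256 digest: consistent across records, but not recomputable without the key."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def authorized_view(record: dict, role: str, key: bytes) -> dict:
    """Return the subset of a record a role may see; unknown roles get nothing (deny by default)."""
    if role not in POLICY:
        return {}
    allowed = POLICY[role]
    view = {}
    for column, value in record.items():
        if column in allowed:
            view[column] = value
        elif column in PII_COLUMNS:
            view[column] = pseudonymize(str(value), key)  # hidden, yet still usable as a join key
        # any other disallowed column is simply dropped
    return view
```

In this sketch an analyst can still count distinct customers through the pseudonymized `email`, while support staff see it in clear text. The key passed to `authorized_view` should come from a secrets manager rather than source code.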

Key Technologies in Data Engineering

Data engineering relies on a range of technologies to manage, organize, and analyze large volumes of data efficiently. At the core of the data engineering process are distributed computing frameworks such as Apache Hadoop and Apache Spark, which store and process large datasets across clusters of machines. These frameworks are complemented by streaming technologies such as Apache Kafka and Apache Flink, which support real-time data pipelines for continuous data ingestion and analysis. Orchestration tools such as Apache Airflow automate and manage complex workflows, while cloud platforms like AWS, Google Cloud Platform, and Microsoft Azure offer a variety of storage, processing, and analytics services tailored to business needs. Alongside traditional databases and data warehouses, data lakes store unstructured and semi-structured data. Containerization and orchestration technologies like Docker and Kubernetes make data engineering systems easier to deploy and scale, while workflow management tools such as Luigi and Azkaban help organize and monitor data workflows.

Furthermore, data integration through ETL (extract, transform, load) plays a crucial role in moving data from various sources into target systems while ensuring accuracy and reliability in data pipelines. Machine learning frameworks are increasingly integrated into data pipelines for predictive analytics and insight extraction, while streaming analytics technologies enable real-time processing and analysis. Data governance and security solutions ensure compliance and protect sensitive data throughout its lifecycle, providing a solid foundation for robust and reliable data engineering practices.
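The ETL pattern can be sketched with the Python standard library alone: `csv` reads the source and an SQLite table serves as the target. The column names (`order_id`, `country`, `amount`) and the rule of rejecting rows with unparseable values are assumptions for illustration, not part of any particular tool.

```python
import csv
import io
import sqlite3


def extract(csv_text: str):
    """Extract: read raw rows from a CSV source (an in-memory string here for brevity)."""
    return list(csv.DictReader(io.StringIO(csv_text)))


def transform(rows):
    """Transform: cast types, normalize values, and drop rows that fail validation."""
    clean = []
    for row in rows:
        try:
            record = (int(row["order_id"]), row["country"].strip().upper(), float(row["amount"]))
        except (KeyError, ValueError, AttributeError):
            continue  # reject malformed rows instead of loading bad data
        clean.append(record)
    return clean


def load(rows, conn):
    """Load: write cleaned rows into the target table; re-runs overwrite rather than duplicate."""
    conn.execute("CREATE TABLE IF NOT EXISTS orders "
                 "(order_id INTEGER PRIMARY KEY, country TEXT, amount REAL)")
    conn.executemany("INSERT OR REPLACE INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()
```

Usage: `load(transform(extract(raw_csv)), sqlite3.connect(":memory:"))`. Because `load` uses `INSERT OR REPLACE` keyed on `order_id`, rerunning the job over the same input does not create duplicates; this idempotency is what makes retries safe.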

Best Practices in Data Engineering

- Design for Scalability and Performance: Design data pipelines and infrastructure that can scale horizontally to handle increasing data volumes and accommodate growing workloads.

- Automate the Data Pipeline: Use automation and orchestration tools such as Apache Airflow, Luigi, or Prefect to schedule, monitor, and manage data pipelines, reducing manual effort and improving reliability.

- Ensure Data Quality and Consistency: Implement data validation, cleaning, and profiling procedures to ensure the integrity, completeness, and accuracy of data across systems and pipelines.

- Optimize Storage and Processing: Use partitioning, indexing, and compression techniques to improve storage efficiency and query performance, reduce costs, and increase throughput.

- Implement Data Security Measures: Identify security threats and vulnerabilities, and put safeguards in place to protect sensitive information from unauthorized access.

- Encrypt data at rest and in transit.

- Enforce strong authentication and authorization mechanisms.

- Embrace Cloud-Native Architecture: Use cloud-native technologies and services to build scalable, flexible, and cost-effective data platforms that can adapt to changing business needs and market conditions.
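Orchestrators such as Airflow, Luigi, and Prefect model a pipeline as a directed acyclic graph (DAG) of tasks with retries. The toy Python runner below illustrates that idea using the standard library's `graphlib` (Python 3.9+); `run_pipeline` and its parameters are hypothetical and do not mirror any of those tools' APIs.

```python
import time
from graphlib import TopologicalSorter


def run_pipeline(tasks, dependencies, max_retries=2, backoff_seconds=0.0):
    """Run callables in dependency order, retrying failures with exponential backoff.

    tasks: name -> zero-argument callable
    dependencies: name -> set of upstream task names that must finish first
    """
    sorter = TopologicalSorter()
    for name in tasks:
        sorter.add(name, *dependencies.get(name, ()))
    completed = []
    for name in sorter.static_order():  # raises graphlib.CycleError on circular dependencies
        for attempt in range(max_retries + 1):
            try:
                tasks[name]()
                completed.append(name)
                break
            except Exception as exc:
                if attempt == max_retries:
                    raise RuntimeError(f"task {name!r} failed after {attempt + 1} attempts") from exc
                time.sleep(backoff_seconds * 2 ** attempt)  # wait longer after each failure
    return completed
```

For instance, `run_pipeline({"extract": e, "transform": t, "load": l}, {"transform": {"extract"}, "load": {"transform"}})` runs the three steps in order and retries a transient failure in `transform` before giving up. Real orchestrators add scheduling, parallelism, persisted task state, and monitoring on top of this core.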

Real-World Applications of Data Engineering

E-commerce and Retail:

Analyze customer behavior, purchase history, and inventory data to personalize recommendations, optimize pricing, and forecast demand.

Finance and Banking:

Detect fraud, assess credit risk, and optimize investments using predictive analytics and machine learning models trained on historical transaction data.

Health and Life Sciences:

Analyze electronic health records, medical imaging data, and genomic data to improve patient outcomes, personalize treatment plans, and accelerate drug discovery.

Manufacturing and Supply Chain:

Monitor manufacturing processes, analyze sensor data, and optimize supply chain operations to improve efficiency, reduce downtime, and minimize operating costs.

Media and Entertainment:

Analyze audience engagement, consumption patterns, and social media interactions to optimize content recommendations, target ads, and improve content distribution.

Future Trends in Data Engineering

Real-Time Data Processing:

Growing demand for real-time analytics and insights will push businesses to adopt streaming data processing technologies such as Apache Kafka and Apache Flink to build real-time data pipelines and applications.
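Windowed aggregation is a core operation of real-time pipelines. The Python sketch below counts events per key in fixed, non-overlapping (tumbling) windows over an in-order stream of `(timestamp, key)` pairs; the function name and event format are assumptions. Stream processors such as Apache Flink additionally handle late and out-of-order events with watermarks, which this sketch omits.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, Tuple


def tumbling_window_counts(
    events: Iterable[Tuple[float, str]], window_seconds: float
) -> Iterator[Tuple[float, Dict[str, int]]]:
    """Yield (window_start, counts_per_key) for each tumbling window.

    Assumes events arrive in timestamp order; windows with no events are not emitted.
    """
    current_start = None
    counts = defaultdict(int)
    for ts, key in events:
        start = ts - (ts % window_seconds)  # align the timestamp to its window boundary
        if current_start is None:
            current_start = start
        elif start != current_start:
            yield current_start, dict(counts)  # window closed: emit its result
            current_start, counts = start, defaultdict(int)
        counts[key] += 1
    if current_start is not None:
        yield current_start, dict(counts)  # flush the last open window
```

A generator fits the streaming model: each window's result is emitted as soon as an event from a later window arrives, without holding the whole stream in memory.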

Machine Learning Technologies:

The convergence of data technologies and machine learning will produce essential tools for managing machine learning workflows, model training, and deployment at scale.

Edge Computing:

The proliferation of Internet of Things (IoT) devices and edge computing platforms will require data engineers to configure and deploy distributed data services and analytics solutions that can operate at the network edge.

DataOps and MLOps:

Adopting DataOps and MLOps practices will streamline the development, deployment, and operation of data pipelines and machine learning models, improving collaboration and agility across data and ML teams.

Data Governance and Compliance:

Increased awareness of data privacy, security, and compliance will drive investment in data governance tools and processes that ensure responsible and ethical use of data.

With the right data engineering technologies, organizations can build robust data systems and unlock the full value of their data assets in the digital age. Whether you are an experienced data engineer or new to the field, this guide offers a framework of core data engineering concepts for navigating a challenging digital landscape.